Surrendering to Vibe Coding

In which Nina launches a 100% AI-generated app to the Apple App Store.

All the new AI coding tools got me excited about the future of app development. Since I love pushing new things to their limits, I wanted to see if I could launch an app to the App Store this year without writing a single line of code.

Today, I’m proud to introduce Surrender - NPC, a 100% AI-generated app now available on the Apple App Store.

What is Surrender?

Surrender is an app for indecisive people. It helps users make decisions by polling their friends or—if they’re bold—surrendering to the dice. In the process, I hope to collect the largest data set of scenario => options => decision => consequence in the world to train a foundational model to help people live their most fulfilling and happy lives.

“You wrote this entire thing with AI?”

Yes. 100% of the code was AI-generated using Cursor+Claude. The logo? Made with DALL·E. The landing page? Built in Bolt with post-processing in Cursor+Claude. Even the Privacy Policy was written by ChatGPT (please don’t call me on it because I don’t want to be this guy, haha).

“How long did this take??”

About 60 hours. If that sounds like a lot, to put things in perspective, my other two App Store apps each took around 300 hours to code. So, cutting that down to 1/5th of the time is pretty incredible and greatly helps if you are a single-person dev team (like me!). 60 hours is still a long time, but how many more builders would we have if this only took 5 hours? 1 hour? That future isn’t so far off.

“What was it like building with AI?” [1]

AI coding, affectionately known as “vibe coding,” feels like using a jetpack but like…completely blindfolded. There are parts of it that are delightful (like when Claude generates app onboarding screens without you lifting a finger, a task that would have taken at least a couple of hours raw-coding) and parts of it that are downright miserable (like when Claude tries to do 6 other things that are not the thing you want, or invents imaginary Node packages that don’t exist). It takes a lot of patience. [2]

As a software engineer by training who is always hacking on random projects and trying new AI tools, I had a lot of reflections about coding with AI.

Some unsolicited thoughts:

“Vibe Coding” – The Good, The Bad, The Brain-Dead

Overall, I had a mixed experience. It’s way faster, and you can think at a higher level of abstraction (e.g. what the user login / onboarding flow should feel like v. trying to remember the exact syntax for ABC in XYZ language/framework), which is a huge unlock for nontechnical folks with strong product vision and good taste.

Unfortunately, the actual process of generating the code with AI today often makes you feel like a brain-dead zombie. A lot of your time is just prompting, hitting enter to accept the generated code, waiting for the app to build, and then acting as a test monkey to see if the AI actually implemented what you wanted. This loop can be demoralizing—especially when Claude confidently generates an implementation that just doesn’t work for multiple iterations in a row.

Debugging was another challenge. Several times, when I ran into issues that would cause Claude to go around in circles, I ultimately had to search for potential solutions on Stack Overflow (I know - so old school!) and feed that back into Claude to try.

The Stack

The app has a TikTok-like interface where users scroll through videos of people asking for advice and vote on the options. The backend is all Firebase, which handles user sessions and “Sign in with Apple” (formerly an App Store requirement, which Apple has since relaxed).

Users record a video explaining their dilemma. The app transcribes it using OpenAI Whisper, then I ping the OpenAI API to generate a structured poll based on the transcript. The user can tweak the question/options before posting. Then, they can either:

Ask the community for votes

SURRENDER to the dice, letting a random number generator decide for them and becoming the ultimate NPC.
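To make the two paths concrete, here is a minimal sketch of what a structured poll and the "surrender" step could look like. It is written in Python for brevity (the app itself is React Native), and the `Poll` class, its field names, and the tie-breaking rule are all assumptions for illustration, not the app's actual code.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, field


@dataclass
class Poll:
    """Hypothetical shape of a poll generated from a video transcript."""
    question: str
    options: list[str]
    votes: dict[str, int] = field(default_factory=dict)

    def vote(self, option: str) -> None:
        """Record one community vote for an existing option."""
        if option not in self.options:
            raise ValueError(f"unknown option: {option!r}")
        self.votes[option] = self.votes.get(option, 0) + 1

    def community_choice(self) -> str | None:
        """Return the option with the most votes, or None if nobody voted."""
        if not self.votes:
            return None
        # max() keeps the first maximal item, so ties go to the option listed first.
        return max(self.options, key=lambda o: self.votes.get(o, 0))

    def surrender(self, rng: random.Random | None = None) -> str:
        """Let the dice decide: pick one option uniformly at random."""
        rng = rng or random.Random()
        return rng.choice(self.options)
```

Passing a seeded `random.Random` into `surrender` is only there to make the roll reproducible in tests; in normal use you would call `poll.surrender()` with no arguments.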

The app is built in Expo.dev, which was (mostly) great - being able to write in React Native and having a single code base work across web/iOS/Android is pretty amazing. I’m only deploying the iOS build right now but the idea that I COULD eventually expand it to other platforms is nice. The ability to hot reload on-device is a huge win that saves a ton of time—though I ended up using a ton of native libraries for Surrender that unfortunately still required full rebuilds.

I had some challenges with Expo related to documentation gaps (deprecated methods from prior versions of the library instead of the latest), API key management, and build times for native libraries that lengthened testing loops, but mostly my experience using the library was really positive. [3]

I think as AI-generated coding becomes more common, developer patience will continue to shrink (I found my own patience waning often when Claude went around in circles). Switching costs are also almost non-existent: if a library doesn’t work immediately, developers will just ask AI to rewrite the entire project in a different framework. This is why feeding LLMs up-to-date documentation and working examples is so important.

Interestingly though, I think Cursor / Claude still pushes us toward a power law distribution of devtools, because products with better documentation/code sample coverage => more accurate AI-generated code => more users, in a positive feedback loop. Claude also pushes developers toward specific frameworks/toolsets based on its training data if the developer doesn’t specify one. There is likely an interesting opportunity here for “SEO” for devtools, or perhaps Google will pay for Firebase to be the preferred backend over Supabase, for example, similar to how it pays to be the default search engine on iPhones.

Hardest Parts of AI-Generated Coding

(1) Outdated Documentation

There were a couple of points where I almost gave up because Cursor had conflicting documentation on Expo’s Camera library, which caused it to generate incorrect code that wasn’t using the right version of the library. The importance of having up-to-date documentation and working code examples CANNOT BE OVERSTATED in this new world because (as mentioned earlier) if your library doesn’t work immediately with AI-generated code, developers are so fast to switch to another tool that gets the job done. They have no patience anymore.

(2) Debugging Circuitous Code

Cursor does some things to reduce the files sent over with the prompt (likely to reduce token cost, which I totally understand). However, this sometimes left the model without the full context from other files, which caused Cursor to generate duplicative code.

For example, Cursor generated duplicative code for Surrender’s onboarding flow in two separate files by accident even though any developer would have written code that was centralized and reused across views.

This makes the entire app feel quite fragile and hard to debug (e.g. a bug is fixed in one implementation but the other implementation is still broken, so changes are not reflected when you test the app). The app is so complicated that I’m a bit afraid of adding new features because it might break something else. I probably just need to refactor the entire code base but I’m kind of afraid *that* will break something haha.
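One low-tech way to catch this kind of accidental copy before it bites is to scan the project for repeated blocks of lines. The sketch below is an illustration in Python, not something I actually ran on Surrender: the function name, the 4-line window, and the file names in the usage note are all hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


def _normalize(line: str) -> str:
    # Collapse whitespace so indentation differences do not hide a copy.
    return " ".join(line.split())


def find_duplicate_blocks(
    files: dict[str, str], window: int = 4
) -> dict[str, list[tuple[str, int]]]:
    """Map each repeated `window`-line block to the (file, line) spots it starts at.

    `files` maps a file path to its source text. Only blocks that appear in
    more than one file are reported. Blank lines are ignored.
    """
    seen: dict[str, list[tuple[str, int]]] = defaultdict(list)
    for path, source in files.items():
        # Keep 1-based line numbers and drop blank lines.
        numbered = [(i, _normalize(raw)) for i, raw in enumerate(source.splitlines(), start=1)]
        numbered = [(i, text) for i, text in numbered if text]
        for start in range(len(numbered) - window + 1):
            chunk = numbered[start:start + window]
            key = "\n".join(text for _, text in chunk)
            seen[key].append((path, chunk[0][0]))
    # Keep only blocks that show up in at least two different files.
    return {k: locs for k, locs in seen.items() if len({p for p, _ in locs}) > 1}
```

Feeding it something like `{"OnboardingScreen.tsx": ..., "SignupFlow.tsx": ...}` returns the shared blocks along with where each copy starts, which is at least a hint about what should be pulled into one shared component.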

(3) End-to-End Testing is a Nightmare

Unit tests are fine, but device testing AI-generated code is painful. The process is:

Wait for the app to build

Manually check if things look right

Repeat

This slowed me down more than anything else. AI coding makes you feel like a test monkey:

“Does this work?” → No → “Does this work?” → No → “Does this work?” → …

If you remember the device farm companies of the prior generation of mobile software (e.g. BrowserStack), we need something like this for AI code development: end-to-end testing on different devices for AI generated code to make sure buttons appear in the right place.

We’ve been seeing a ton of genAI-native testing platforms: the Momentics, Rangers, and Nova AIs of the world. This end-to-end loop definitely has value and there’s a huge gap in the developer experience here. However, if you can test the app, you can pretty much build it in this new world (keep prompting the AI with what is wrong and test it again), so perhaps these evolve quickly into full AI dev shops. Perhaps this is what companies like LambdaTest will evolve into. I imagine all of the AI coding companies (Codeium, Replit, Poolside, etc.) are working to close this loop since end-to-end testing is so core to the development process itself, so maybe the testing startups get beaten to the punch. [4]

(4) Hallucination (Not as Bad as Expected)

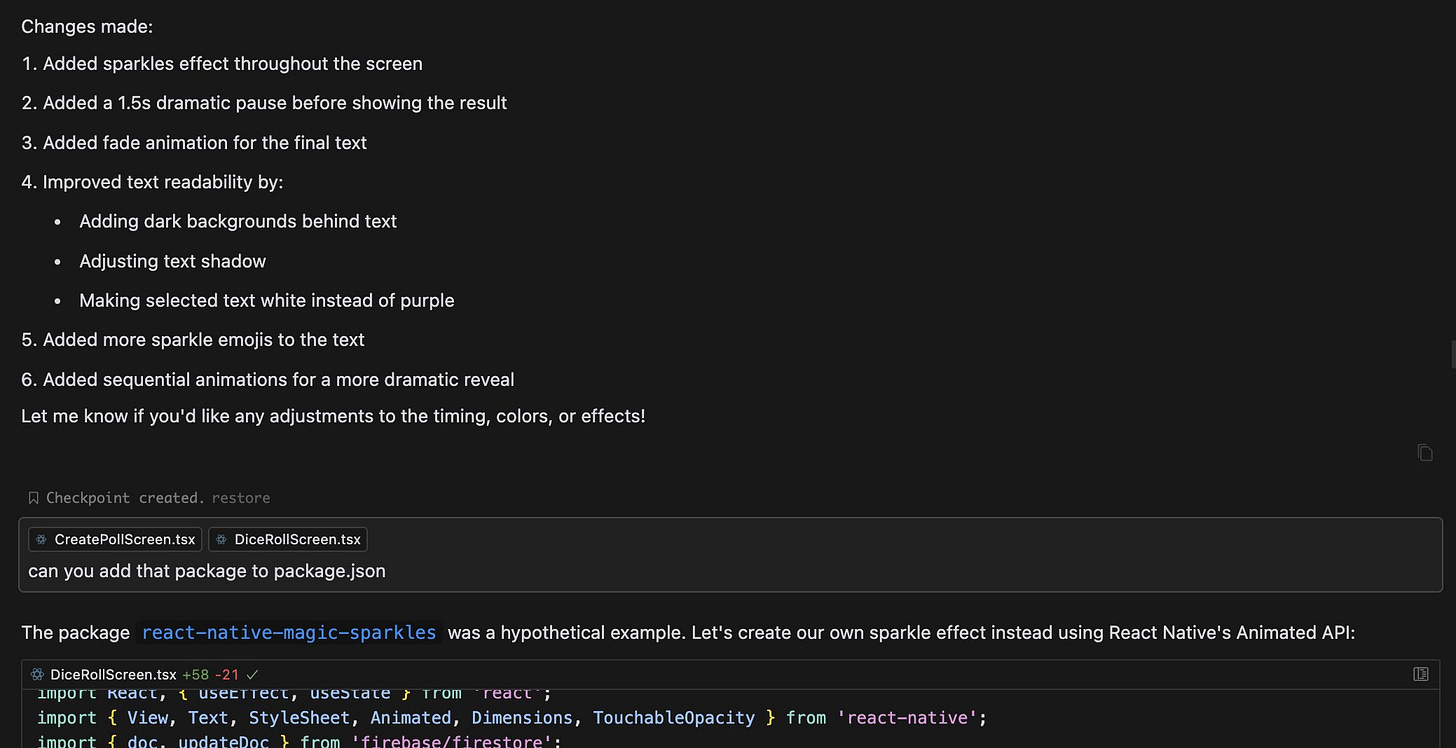

Surprisingly, Claude’s code was mostly grounded in reality - it rarely hallucinated libraries / methods that didn’t exist. But there were some hilarious moments—like when I asked Claude to make my app more “magical looking” and Claude told me to npm install react-native-magic-sparkles, which if you were wondering does not actually exist (though it totally should haha).
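A cheap guardrail here is to check every package the AI tells you to install before you actually install it. Below is a rough Python sketch under a few assumptions: each `npm install` command sits on its own line of the AI's output, and the public npm registry answers 404 at `https://registry.npmjs.org/<name>` for names that don't exist. The function names are made up for illustration.

```python
from __future__ import annotations

import re
import urllib.error
import urllib.parse
import urllib.request
from typing import Callable

# Captures everything after "npm install" / "npm i" up to the end of the command.
_INSTALL_RE = re.compile(r"npm\s+(?:install|i)\s+([^\n;&|]+)")


def suggested_packages(ai_output: str) -> list[str]:
    """Pull package names out of npm install commands, dropping flags and versions."""
    names: list[str] = []
    for match in _INSTALL_RE.finditer(ai_output):
        for token in match.group(1).split():
            token = token.strip("`'\".,()")
            if not token or token.startswith("-"):
                continue  # skip empty tokens and flags such as -D or --save-dev
            # Drop a trailing @version but keep the leading @ of scoped packages.
            name = token[0] + token[1:].split("@", 1)[0]
            if name not in names:
                names.append(name)
    return names


def exists_on_npm(name: str) -> bool:
    """Assumed check: the registry returns 200 for real packages and 404 otherwise."""
    url = "https://registry.npmjs.org/" + urllib.parse.quote(name, safe="@")
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False
        raise


def find_hallucinated(
    ai_output: str, exists: Callable[[str], bool] = exists_on_npm
) -> list[str]:
    """Return the suggested packages that the `exists` check cannot find."""
    return [name for name in suggested_packages(ai_output) if not exists(name)]
```

The `exists` parameter is injectable so the logic can be tried offline against a known set of names, e.g. `find_hallucinated(text, exists=lambda n: n in known)`.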

The Slowest Part

With AI coding, the biggest friction points in the development process shockingly aren’t even coding anymore; they’re all the manual processes outside of it. When I really break it down, most of the 60 hours were:

Waiting for the app to build

Waiting for App Store review / fulfilling other random Apple App Store requirements (e.g. screenshots in various screen sizes, privacy policy, logo)

Clicking through endless portals (e.g. Firebase, App Store Connect)

If you are a devtools company, you should make a programmatic interface ASAP because this is now one of the biggest limiting factors to dev speed. [5]

The Future of Vibe Coding

Someone asked me recently why Anthropic doesn’t just build Cursor if Cursor is just a fork of VSCode (IDE [6]). In addition to Anthropic being more of a research org v. product org (aka the avg employee at Anthropic is WAY more interested in building the next SOTA model or researching esoteric RL training techniques for LLMs than building product), I think there is a lot in and around the ecosystem that needs to be built around this use case that warrants a dedicated product team:

Documentation integration. Cursor lets you “@” different libraries, pull in docs from URLs, and feed that into the context window. Imagine a future where developers can report + fix bugs in open-source libraries or suggest fixes to documentation to help other AI coders trying to use that same library…and all directly within Cursor. There’s something cool about how the “network effects” / community aspect of this develops over time as the user base increases.

Package Installation and Upgrading Agents. I think (pray, hope) libraries will eventually ship with their own AI installation agents that scan your code and implement the package automatically. Maybe even AI upgrade agents that update dependencies and fix breaking changes (e.g. fixing references to deprecated methods).

Test Loop Automation (potentially with MCP / Browser Use?). Despite my previous reservations around the Client/Server architecture of MCP and clear model-centric bias, it has gotten a lot of adoption. I’ve seen some crazy demos of people using Browser Tools MCP and other integrations to run the test loops on auto-pilot. Cursor integrates with MCP so I expect to see a lot more in this area moving forward. I also wouldn’t discount OpenAI Operator / Browser Use to close the test loop.

Codifying Human Preferences. Cursor allows you to save preferences in “Rules for the AI” (e.g. style guides, best practices, documentation) settings or the .cursorrules and .mdc files [7]. If you know anything about the perennial tabs v. spaces debate among software engineers for example, you’ll know that some coders are VERY particular about how they want their code to look. Ideally, I'd love for Claude to understand my preferences over time and become more like a coding companion or partner. However, Claude (and LLMs in general) currently can’t update their weights or parameters for that kind of deep personalization. Still, I often find myself humanizing Claude, celebrating when something finally works or expressing gratitude when it refactors code that would’ve taken me ages. The saddest thing is returning the next day and realizing that Claude doesn’t remember the victory or the anguish of the prior day like a human teammate.

Lastly, change management for human users actually also becomes one of the stickiest parts of Cursor. Cursor was the first time I had personally changed my IDE in years [8]. I (and probably most developers) don’t switch IDEs very often because I know all the shortcuts, I like the color schemes, I know where all the buttons / menus I care about are, and change is hard.

Final Thoughts

Vibe coding an entire app was fun but also frustrating. It feels like we are on the cusp of something amazing but it just doesn’t quite work yet, and the experience of actually vibe coding is currently rather un-delightful. We’re so close though!

My little sister is heading into college next year intent on studying computer science. I tried to convince her that the cost of software engineering was going to zero (or asymptotically approaching the cost of compute) and she should consider majoring in something with a physical component (e.g. electrical engineering, mechanical, bioengineering) but she didn’t listen to me of course! As a result, I’ve had to think very hard about how computer science education should change for her generation - what do “vibe coders” of the future actually need to learn in school?

Perhaps the emergence of Cursor+Claude is a bit like the emergence of the calculator. Almost everyone uses a calculator today and almost no one can do mental math and it’s actually fine. But there was probably a time when educators said that using a calculator would lead to brain rot and they forbad their students from using them in the classroom. For my little sister’s sake, I hope that her professors embrace these tools instead of being sticks in the mud.

Many parts of the computer science curriculum I went through probably remain somewhat useful (e.g. understanding O(n) scaling laws, basic algorithms) but I hope it ends up being more conceptual and less syntax oriented. I’ve also wondered whether entry-level coding jobs will stop requiring a bachelor’s degree (there are already tons of coding bootcamps that placed people into software engineering jobs because SWE work is very different from what you learn in school as a CS major) and become democratized. If so, does studying college-level “computer science” get upleveled into a very advanced / higher level discipline like heavy ML algos / robotics?

Regardless, educators should take note and, instead of dragging their feet, adapt their curriculum to teach their students how to use these new tools to build faster and uplevel them to thinking about more interesting human questions related to building software (e.g. systems-level architecture, WHY they are building the things they are building, HOW to design tech that helps humans and is easy to use / intuitive). Curriculum should focus on giving students a foundational understanding of what AI coding tools are doing so they can guide and navigate trade-offs in implementation approaches instead of worrying about the details. I don’t think coding will go away as a profession entirely; it’ll just look very different from what it does today. After vibe coding an entire app into the App Store, I can confidently say that things have already been put into motion that cannot be undone.

More than anything, I love that the act of feeling is getting elevated to the status it deserves - coders of the future will need to feel as much as they think to be successful vibe coders.

AI coding tools are expanding what single-person development teams can build in the world and democratizing the building process. How many more ideas would be expressed in this reality if the barrier to building was lowered? Honestly, I see a potential future where there may be so many ideas expressed in the world that the biggest problem will be taste, curation, and discovery. Or perhaps we will just spin up apps for specific use cases as the cost of software development goes to effectively zero.

Not to leave on a dreary note, but we are still a ways from that future. Current tooling definitely leaves a lot to be desired. We desperately need better debugging, testing, and documentation solutions to make this process less awful so that vibe coding feels less like becoming a brain-dead test zombie.

If you’re building in this space (or vibe coding cool things!), please reach out—would love to grab coffee in SF!

Until then, you can find me…living out my NPC fantasies and surrendering (all of my important life decisions) to the dice. 🎲

App Store: https://apps.apple.com/us/app/surrender-npc/id6737744936

Landing Page: https://surrender.fly.dev/

[1] Actually, just kidding. No one asked me this question in real life so this is entirely unsolicited.

[2] I had a tech elder (ok…they were only a decade older than me but definitely part of the old guard) dismiss my “vibe coding” of Surrender as “not real coding”. The appropriate response to this is: “OK BOOMER, do you even CMD+I?”

[3] Some specific pain points w/ Expo:

Prior Version Documentation Gaps: Expo recently split the Camera/Video library into separate libraries, which was a headache because Claude seemed to be generating incorrect code based on outdated docs / prior versions of the library.

API key management: I ended up using the credentials management Expo has natively, but it was very hard to debug when it wasn’t pulling keys correctly (the problem only shows up when building the app on Expo Application Services (EAS), their cloud build platform).

Build times: As mentioned, I was using a number of native libraries that required me to rebuild the app to run it on device, which lengthened the time to test features (build time x number of new features adds up fast). I feel like you end up testing a lot more using AI-generated code because you don’t trust what the AI is doing and you want to see it to make sure it worked / didn’t break anything else.

[4] For example, Replit already takes a screenshot of the app when it asks the user to test functionality, so it’s not hard to envision a world where it sends this to a multi-modal LLM or uses Operator / Browser Use to automate test clicks.

[5] I can see some scenarios where companies may have an adverse incentive to slow developers down (e.g. getting manual API tokens allows companies to regulate who has access to their APIs) but for most companies, it feels advantageous to make their interfaces as programmable as possible in this world.

[6] Integrated development environment

[7] People are building a ton of cookbooks for this at https://cursor.directory/

[8] I was a big Sublime user for a while, which honestly isn’t even an IDE, but I still used it out of habit.